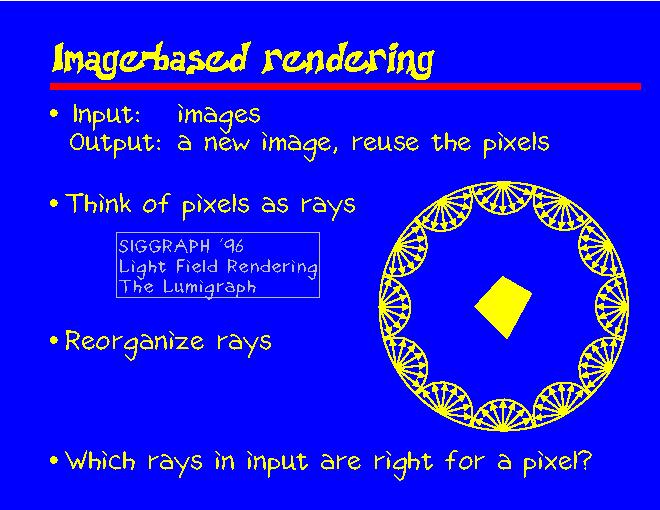

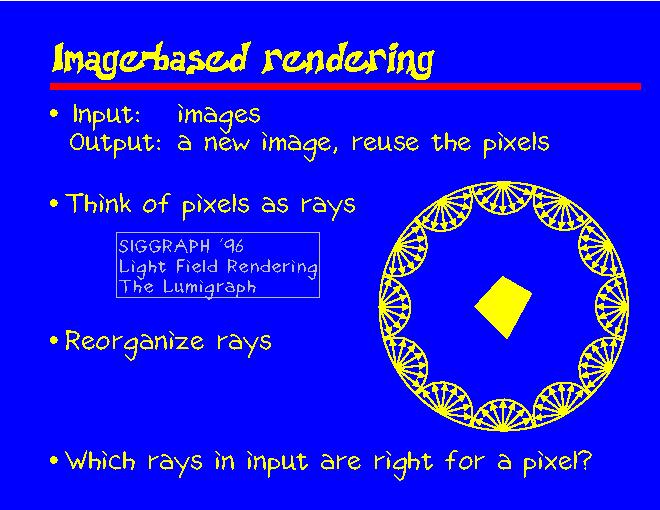

We took most of our ideas for view-dependent texturing from image-based rendering. In image-based rendering, the idea is to capture many images of an object and then synthesize new images from the old ones. Two papers at SIGGRAPH '96, Light Field Rendering and The Lumigraph, both treated each pixel of the input images as a ray carrying a color. In those papers, the rays were reorganized and resampled onto a closed surface around the object, as illustrated in the slide.
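The minimal Python sketch below makes the "pixels as rays" idea concrete: it turns a pixel into a ray and records where that ray crosses a closed surface around the object. It rests on several assumptions. The camera is an unrotated pinhole looking down the -z axis, with its focal length given in pixels. The closed surface is a sphere centered at the world origin. The names `pixel_to_ray` and `hit_bounding_sphere` are hypothetical. The SIGGRAPH '96 papers parameterized rays with pairs of planes rather than a sphere.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def pixel_to_ray(cam_pos, focal, px, py, width, height):
    """Ray through the center of pixel (px, py).

    Assumption: unrotated pinhole camera at cam_pos looking down -z,
    focal length in pixels, image y axis pointing down.
    """
    x = (px + 0.5) - width / 2.0
    y = height / 2.0 - (py + 0.5)
    return cam_pos, normalize((x, y, -focal))

def hit_bounding_sphere(origin, direction, radius):
    """Point where a ray first crosses a sphere centered at the world origin.

    Solves |o + t d|^2 = r^2 for unit d: t^2 + 2(o.d)t + (|o|^2 - r^2) = 0.
    Returns None if the ray misses the sphere.
    """
    b = sum(o * d for o, d in zip(origin, direction))
    c = sum(o * o for o in origin) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    sq = math.sqrt(disc)
    t = -b - sq
    if t < 0:
        t = -b + sq  # the ray starts inside the sphere
    if t < 0:
        return None  # the sphere lies entirely behind the ray
    return tuple(o + t * d for o, d in zip(origin, direction))

# Usage: a 1x1 image whose single pixel looks straight at the object.
origin, d = pixel_to_ray((0.0, 0.0, 5.0), 100.0, 0, 0, 1, 1)
print(hit_bounding_sphere(origin, d, 1.0))  # -> (0.0, 0.0, 1.0)
```

Storing each pixel's color together with its surface point and direction gives one resampled "ray with color". Repeating this for every pixel of every input image fills the surface with samples.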

Now we can try to create a new image by aiming a virtual camera at the object and, for each pixel of the new image, looking for a stored ray that could paint it. The question then is how to find the correct ray, or how to interpolate between several rays that are close to, but not exactly, the one we need.
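One simple way to handle the "almost but not quite" case is to blend the stored rays whose directions are closest to the wanted one. The Python sketch below is illustrative and is not the method from the papers or from this talk. It makes three assumptions. First, all candidate rays hit the same surface point. Second, each candidate is weighted by the inverse of its angle to the wanted direction. Third, only the `k` closest candidates are used. The name `blend_rays` and the default `k=3` are hypothetical.

```python
import math

def angle_between(u, v):
    """Angle in radians between two 3-vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    # Clamp so rounding error cannot push acos outside its domain.
    return math.acos(max(-1.0, min(1.0, dot / (nu * nv))))

def blend_rays(wanted_dir, samples, k=3):
    """Interpolate a color for wanted_dir from stored (direction, rgb) rays.

    Assumption: every sample ray hits the same surface point, so only the
    viewing direction differs. Uses the k angularly closest samples,
    weighted by 1 / angle.
    """
    scored = sorted((angle_between(wanted_dir, d), color) for d, color in samples)[:k]
    for ang, color in scored:
        if ang < 1e-9:
            return color  # an exact match needs no interpolation
    weights = [1.0 / ang for ang, _ in scored]
    total = sum(weights)
    return tuple(
        sum(w * color[i] for w, (_, color) in zip(weights, scored)) / total
        for i in range(3)
    )

# Usage: two rays 45 degrees on either side of the wanted direction.
samples = [((1.0, 0.0, 1.0), (1.0, 0.0, 0.0)), ((-1.0, 0.0, 1.0), (0.0, 0.0, 1.0))]
print(blend_rays((0.0, 0.0, 1.0), samples))  # -> (0.5, 0.0, 0.5)
```

With inverse-angle weights, rays seen from nearly the wanted viewpoint dominate the blend. Distant views still contribute a little, which hides seams when the nearest ray changes.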